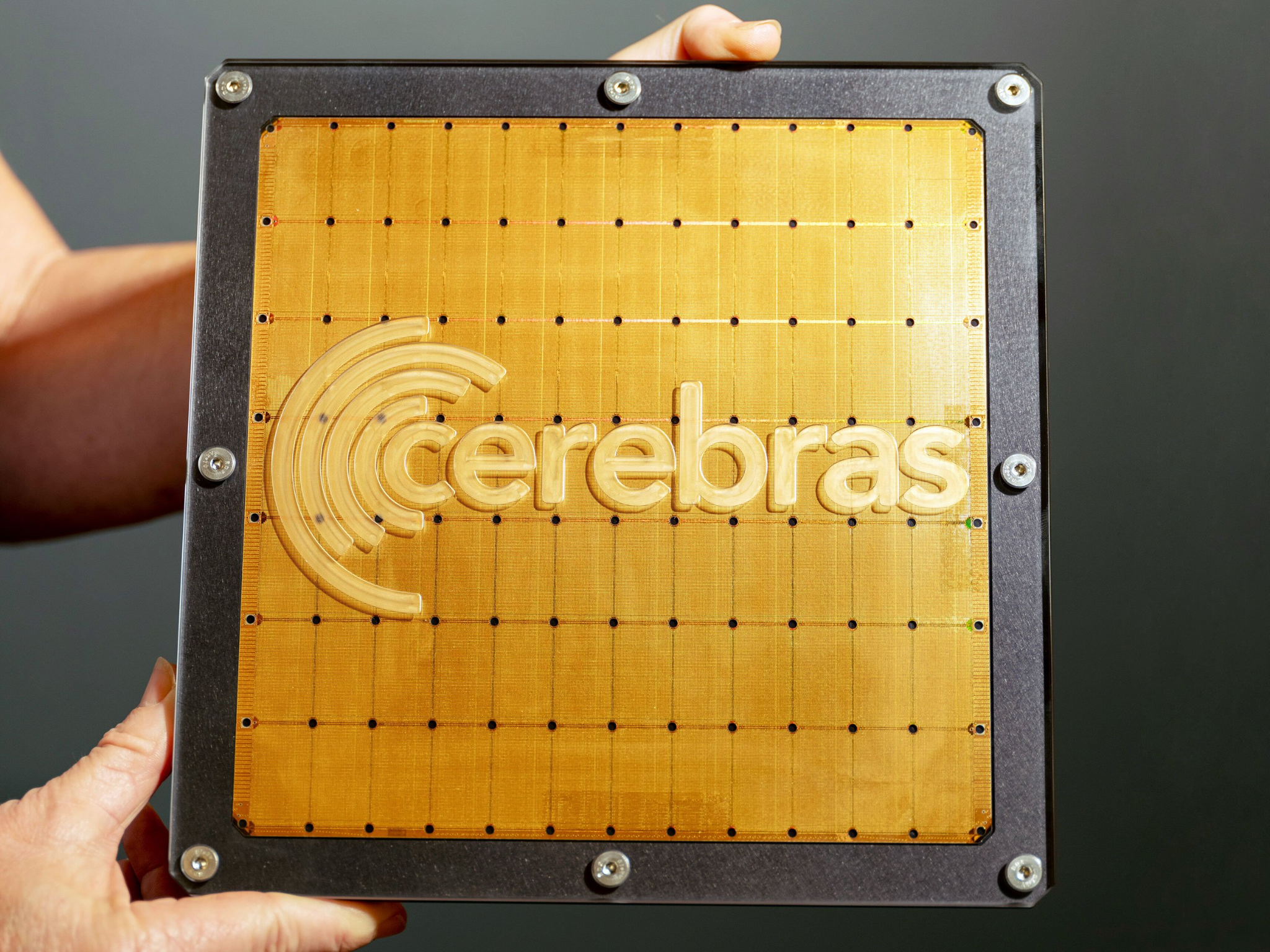

The Largest and Most Powerful Chip Ever Made: Cerebras Unveils a 46,225 mm² Chip with 1.2 Trillion Transistors and 400,000 Cores

Fiona Nanna, ForeMedia News

4-minute read. Updated 7:07 AM GMT, Fri, 30 August 2024

In a major step for artificial intelligence and computing, the startup Cerebras Systems has announced the largest and most powerful chip ever made. The chip, known as the Wafer-Scale Engine (WSE), measures 46,225 mm², roughly 56 times the area of the largest GPU. It offers far more compute, on-chip memory, and communication bandwidth than conventional processors, setting a new benchmark for deep learning and neural network workloads.

Cerebras Systems, based in Sunnyvale, California, builds computer systems designed to accelerate deep learning. The WSE is roughly the size of an iPad: its area equals that of a square about 215 mm (8.5 inches) on a side. It integrates 1.2 trillion transistors, 400,000 programmable processor cores, and 18 gigabytes of on-chip SRAM. Those figures dwarf conventional chips and place Cerebras at the forefront of AI hardware.

A Groundbreaking Feat in Chip Design

Building the WSE meant using an entire silicon wafer. Conventional chipmaking cuts each wafer into many small, separate dies; Cerebras instead keeps nearly the whole wafer as a single chip. This lets the company place far more compute and memory on one piece of silicon than any individual die can hold. Development took an investment of $112 million and the work of 173 engineers. The result is a chip that is both the largest ever made and the most capable in raw compute and memory.

This design packs an unusual amount of computation into one device, which matters for the large matrix calculations at the heart of deep learning models. When a model is too large for one conventional chip, it has to be split across many chips that exchange data over off-chip links, which are much slower than on-chip wiring. Keeping cores and memory on a single wafer removes much of that communication bottleneck and opens new possibilities for AI and machine learning research.

Implications for the Future of Deep Learning

The chip could reshape deep learning and neural network research. As AI systems grow more complex, they need vast amounts of data and computation to train. The WSE’s ability to process large datasets quickly makes it a powerful tool for AI researchers and developers.

Its 400,000 programmable cores also give researchers the flexibility to build and test larger, more sophisticated neural network models. These advances are likely to improve AI applications across sectors from healthcare and finance to autonomous driving and robotics.

A New Era in AI Hardware Innovation

Cerebras’ WSE is a step forward not only for the company but for the AI industry as a whole. Its capabilities could speed progress in natural language processing, image recognition, and other fields that rely heavily on deep learning. By offering more compute, memory, and communication bandwidth than any other single chip, Cerebras is opening the door to applications that were previously out of reach.

For more on how Cerebras is reshaping AI hardware, visit the Cerebras Systems website. TechCrunch and Wired also cover advances in deep learning and how wafer-scale chips like the WSE are pushing the field forward.