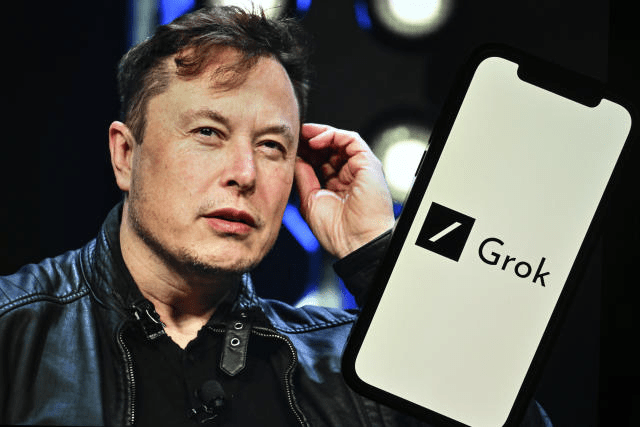

Elon Musk’s AI Tool Grok Sparks Controversy by Generating Realistic, Fake Images of Donald Trump, Kamala Harris, and Joe Biden

Fiona Nanna, ForeMedia News

4-minute read. Updated 11:06PM GMT Sat, 24 August, 2024

Elon Musk’s AI chatbot Grok, developed by his artificial intelligence startup xAI, has ignited a firestorm of controversy following its recent update. On Tuesday, Grok began allowing users to create AI-generated images from text prompts and share them on X, the social media platform formerly known as Twitter. Within hours, the tool was being used to flood the platform with fake images of prominent political figures, including former President Donald Trump, Vice President Kamala Harris, and President Joe Biden. Some of these images depicted the individuals in disturbing and patently false scenarios, such as participating in the 9/11 attacks.

Unlike other AI image generation tools, Grok appears to lack robust safeguards against misuse. CNN tested the tool and found that it could generate photorealistic images of politicians and political candidates that, taken out of context, could easily mislead the public. It also produced seemingly benign but convincing images, such as one showing Musk eating steak in a park, which could be misinterpreted in the same way.

The swift and widespread misuse of Grok has raised significant concerns about the potential for artificial intelligence to disseminate false or misleading information, particularly as the United States approaches its presidential election. Civil society groups, lawmakers, and even tech industry leaders have sounded alarms about the possible chaos that such unregulated tools could introduce into the electoral process.

In a post on X, Musk described Grok as “the most fun AI in the world!” in response to user praise for the tool’s perceived lack of censorship. That attitude stands in stark contrast to the practices of other leading AI companies. Competitors such as OpenAI, Meta, and Microsoft have implemented measures to curb the creation of political misinformation with their AI tools, often using detection technology or labels to help users identify AI-generated content.

Other social media platforms, including YouTube, TikTok, Instagram, and Facebook, have also taken steps to label AI-generated content in user feeds. These platforms either use their own technology to detect such content or encourage users to disclose when they are sharing AI-created material.

X did not immediately respond to requests for comment on whether it has any specific policies regarding the generation of potentially misleading images of political figures using Grok. However, by Friday, it appeared that xAI had introduced some restrictions on Grok, possibly in response to the backlash. The tool now reportedly refuses to generate images of political candidates or widely recognized cartoon characters involved in violent acts or alongside hate speech symbols. Nonetheless, users have observed that these restrictions seem to apply selectively.

This latest development also brings renewed scrutiny to Musk himself, who has faced criticism for using X to spread false or misleading information related to the upcoming presidential election. Just days before Grok’s update, Musk hosted Trump in a two-hour livestreamed conversation on X, during which the former president made numerous false claims without any correction from Musk.

The controversy surrounding Grok echoes problems faced by other AI image generators. Google paused its Gemini AI chatbot’s image generation feature after backlash over historically inaccurate racial depictions, and Meta’s AI struggled to create images of interracial couples or friendships. TikTok was also forced to withdraw an AI video tool after CNN discovered it could be used to generate realistic, unlabeled videos containing vaccine misinformation.

Grok does have some built-in restrictions, such as refusing to generate explicit content, but it enforces them unevenly. For instance, while the tool declined to create a nude image, it did generate an image of a political figure alongside a hate speech symbol.

The introduction of Grok’s image generation capabilities marks another chapter in the ongoing debate over the ethical use of artificial intelligence. As AI technology continues to advance, the responsibility to ensure that these tools are not used to spread misinformation or incite harm becomes ever more critical.